
Crawl Budget For Large Sites – What Every SEO Should Know

On Digitals

19/01/2026


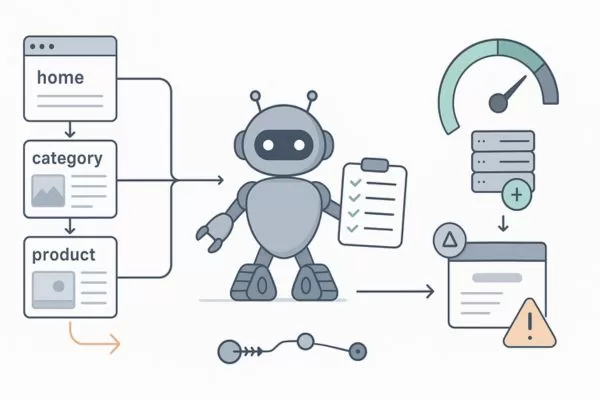

Crawl budget management is vital for large sites because it helps search engines index all your important pages efficiently. Managing it properly can boost SEO performance and prevent wasted server resources. Let’s dive in and explore how large websites can optimize their crawl budget effectively!

Why is crawl budget for large sites worth learning about?

Crawl budget for large sites is essential to ensure Google efficiently indexes all important pages. It determines how many pages and how much bandwidth Googlebot will use on your site within a given time. Large or frequently updated sites must pay special attention to their crawl budget to maintain optimal search visibility.

The crawl budget depends on two main factors: crawl capacity, which is how many connections Googlebot can open without overloading your server, and crawl demand, which reflects the importance and freshness of your content. To better plan your site updates, you can learn how to calculate crawl budget for large websites, ensuring Googlebot uses its resources efficiently.

Simply put, if a site has a million pages and Googlebot crawls 500,000 daily, the effective crawl budget is about 500K pages per day. Properly managing these factors helps large websites maximize SEO performance and server efficiency.
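The arithmetic in the example above can be sketched as a small calculation. This is only an illustration: the function name `days_to_crawl` is made up for this article, and it assumes a constant daily crawl rate with every URL fetched exactly once, which real crawlers do not guarantee because popular pages are often re-crawled.

```python
import math


def days_to_crawl(total_pages: int, pages_crawled_per_day: int) -> int:
    """Days needed for one full pass over the site at a constant daily crawl rate."""
    if pages_crawled_per_day <= 0:
        raise ValueError("pages_crawled_per_day must be positive")
    # Round up: a partly used day still counts as a day.
    return math.ceil(total_pages / pages_crawled_per_day)


# Figures from the example above: 1,000,000 pages, 500,000 crawled per day.
print(days_to_crawl(1_000_000, 500_000))  # 2
```

Under these simplified assumptions, a full pass over the site takes about two days, so a newly published page could wait that long before its first crawl.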

Effectively optimizing the crawl budget for large sites ensures that Googlebot focuses on the most valuable pages first. Strategies include improving site structure, eliminating low-value pages, and managing URL parameters.

Understanding how Google indexes extensive site structures effectively

Why crawl budget optimization is critical for large websites

For large websites, efficient crawling is crucial to ensure that important pages are indexed quickly. Without proper crawl budget management, search engines may miss new content and updates. Optimizing the crawl budget helps search engines focus on high-value pages and improves overall SEO performance.

Optimizing crawl budget allows Googlebot to focus on high-value pages first. This ensures that the most important pages of a large website are crawled regularly. If you manage a large number of pages, this is a real advantage because newly published pages are more likely to be considered for indexing promptly.

As a result, proper crawl budget management helps search engines prioritize pages that drive traffic and conversions. Large websites benefit by avoiding wasted time on low-value or duplicate content.

In addition, an efficient crawl budget keeps search engines from wasting resources on unimportant or broken pages. Large websites can maintain server health while improving overall SEO performance, with fewer errors to track down. On a large-scale site, such errors are difficult and time-consuming to fix.

The must-know importance of managing crawl budget for large sites

Effective strategies to optimize crawl budget for large websites

For large websites, effective crawl budget management ensures search engines index high-value pages efficiently. Implementing the right techniques prevents wasted crawling and improves overall SEO performance.

Crawl error solutions for large sites

Crawl budget for large sites can be wasted if search engines encounter crawl errors like broken links or 404 pages. Regularly check Google Search Console to identify these issues. Fixing errors and redirect chains ensures bots can access important pages without interruption.
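One way to spot redirect chains is to follow a redirect map exported from a crawler or server configuration. The sketch below is a minimal illustration: the `redirects` mapping, the sample paths, and the function name `redirect_chain` are all hypothetical, and the code works on data you already have rather than making live requests.

```python
def redirect_chain(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow `redirects` from `start` and return every URL visited, in order."""
    chain = [start]
    seen = {start}
    while chain[-1] in redirects and len(chain) <= max_hops:
        nxt = redirects[chain[-1]]
        chain.append(nxt)
        if nxt in seen:  # redirect loop: stop after recording the repeat
            break
        seen.add(nxt)
    return chain


# Hypothetical redirect map: /old -> /interim -> /new
redirects = {"/old": "/interim", "/interim": "/new"}
chain = redirect_chain("/old", redirects)
print(chain)           # ['/old', '/interim', '/new']
print(len(chain) - 1)  # 2 hops; pointing /old straight at /new would make it 1
```

Any chain with more than one hop is a candidate for collapsing into a single redirect to the final URL.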

Creating a better site structure for SEO

A well-structured site helps search engines navigate pages more easily. Following crawl budget best practices for large sites, such as using clear hierarchies and logical internal links, ensures that important pages are discovered and indexed promptly.

Configuring robots.txt to protect crawl budget

Proper crawl budget management for large sites involves configuring robots.txt correctly. Block non-essential pages such as admin panels while keeping important resources accessible. Regular audits ensure crawlers follow the intended rules.
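As part of a regular audit, robots.txt rules can be tested before they go live. The sketch below uses Python's standard `urllib.robotparser`; the rules in `ROBOTS_TXT`, the `example.com` URLs, and the helper name `is_allowed` are illustrative assumptions. Note that Python's parser applies the first matching rule, while Google documents a most-specific-match policy, so treat the result as a rough check rather than a statement of how Googlebot will behave.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block the admin panel, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "https://example.com/admin/login"))     # False
print(is_allowed(ROBOTS_TXT, "https://example.com/products/shoes"))  # True
```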

XML sitemap tips for better indexing

XML sitemaps help search engines discover valuable pages on large websites. Submit sitemaps to Google Search Console and update them regularly. Exclude duplicate or low-value pages to maintain crawl efficiency.
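A sitemap can be generated from a list of pages rather than maintained by hand. The following is a minimal sketch using Python's standard `xml.etree.ElementTree`; the function name `build_sitemap` and the sample URLs are assumptions, and a real site would also need to respect the sitemap protocol's size limits by splitting large files.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified date as YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for loc, lastmod in pages:
        if loc in seen:  # skip duplicate URLs so each page is listed once
            continue
        seen.add(loc)
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages; the duplicate entry is dropped.
pages = [
    ("https://example.com/", "2026-01-19"),
    ("https://example.com/products/shoes", "2026-01-15"),
    ("https://example.com/", "2026-01-19"),
]
print(build_sitemap(pages))
```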

Managing duplicate content carefully

Crawl budget for large sites is negatively affected by duplicate content. Apply canonical tags and 301 redirects to merge duplicate pages. This ensures bots focus on the preferred content version.
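To audit canonical tags at scale, it helps to extract the declared canonical URL from each page's HTML. The sketch below uses Python's standard `html.parser`; the class name `CanonicalFinder`, the helper `find_canonical`, and the sample markup are hypothetical, and the code reads an HTML string you supply rather than fetching pages.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag encountered."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower()
        if tag == "link" and rel == "canonical" and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html: str):
    """Return the canonical URL declared in `html`, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical page: a filtered product URL pointing at its preferred version.
page = '<html><head><link rel="canonical" href="https://example.com/shoes"></head></html>'
print(find_canonical(page))  # https://example.com/shoes
```

Pages whose canonical URL differs from their own address are duplicates of the preferred version; pages with no canonical at all are worth reviewing.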

Giving priority to key pages for better SEO

Direct crawl focus toward pages that generate traffic or leads. Use internal linking and frequent updates to signal their importance. Reduce emphasis on outdated or low-quality pages to optimize crawl efficiency.

Effective pagination strategies for websites

Crawl budget for large sites can be impacted by poorly handled pagination. The rel="next" and rel="prev" tags can indicate page sequences to some search engines, although Google has said it no longer uses them as an indexing signal, so make sure paginated pages are also connected by ordinary crawlable links. Ensure key content is accessible without excessive crawling through multiple layers. This is especially important when managing crawl budget for e-commerce sites, where product pages and categories need to be crawled efficiently.

Reducing media size to improve crawl efficiency

Large media files can slow down crawl rates. Optimize images and videos for smaller file sizes while keeping quality intact. Hosting media on a CDN reduces server load and improves bot efficiency.

Tracking crawl budget for large sites

Monitoring tools help track how Googlebot interacts with your website. Crawl budget for large sites can be optimized by analyzing these stats. Address pages that are over-crawled or under-crawled to improve indexing efficiency.
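Server access logs are one source of these stats. The sketch below counts requests per path from lines in the common "combined" log format; the regular expression, the function name `googlebot_hits`, and the sample log lines are illustrative assumptions, and your log format may differ. It matches on the user-agent string only, which can be spoofed, so a production check would also verify the requesting IP address.

```python
import re
from collections import Counter

# Captures the request path and user agent from a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_lines) -> Counter:
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


# Made-up sample lines for illustration only.
sample_log = [
    '66.249.66.1 - - [19/Jan/2026:10:00:00 +0000] "GET /products/shoes HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [19/Jan/2026:10:00:05 +0000] "GET /search?q=a&page=97 HTTP/1.1" 200 912 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [19/Jan/2026:10:00:09 +0000] "GET /products/shoes HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
for path, count in googlebot_hits(sample_log).most_common():
    print(count, path)
```

Paths with many hits but little value (such as deep internal search results) are over-crawled; important paths that rarely appear are under-crawled.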

Implement schema markup to improve indexing

Using structured data makes it easier for search engines to understand your pages. Add schema markup to products, events, and FAQs. Testing structured data ensures errors do not prevent proper crawling and indexing.
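Structured data is commonly expressed as JSON-LD using the schema.org vocabulary. The sketch below builds a minimal Product description with Python's standard `json` module; the function name `product_jsonld` and the sample values are assumptions, and real product markup usually carries more properties than shown here. The resulting JSON would be placed in a script tag of type application/ld+json on the page.

```python
import json


def product_jsonld(name: str, url: str, price: str, currency: str) -> str:
    """Return a minimal schema.org Product description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "url": url,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    return json.dumps(data, indent=2)


# Hypothetical product used only for illustration.
print(product_jsonld("Trail Running Shoes", "https://example.com/shoes", "89.00", "USD"))
```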

Increasing crawl efficiency through faster pages

Crawl budget for large sites can be limited by slow-loading pages. Optimize Core Web Vitals, use a CDN, and minimize HTTP requests. Faster server responses allow bots to crawl more pages in the same amount of time.

Removing obsolete pages to improve SEO

Conduct content audits to identify pages with little value. Consolidate, improve, or remove these pages. Using a 410 status code for permanently removed pages signals search engines not to waste crawl budget on them.

Enabling HTTPS for better large site performance

Crawl budget for large sites benefits from HTTPS adoption. Secure your site with an SSL certificate and redirect HTTP URLs to HTTPS. Update sitemaps, robots.txt, and canonical tags to reflect the secure URLs.

Optimizing crawl frequency

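Help search engines revisit pages that change often so updates are indexed in a timely way. Keep the lastmod values in your XML sitemap accurate, since Google has said it ignores the priority and changefreq settings, although other search engines may still read them. Avoid frequent crawling of low-value pages to save crawl budget.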

Practical tips to enhance indexing and site performance

Common crawl budget mistakes large sites should avoid

Steering clear of common crawl budget errors ensures key pages are indexed efficiently. Large websites especially must pay attention to these errors to maximize crawling efficiency.

Orphan pages

Pages on your site can go to waste when they are not linked internally. Orphan pages have no other pages pointing to them, making it hard for search engines to discover and index them. As a result, valuable content stays hidden from search results, and any crawl budget spent on the site never reaches it.

To fix orphan pages, create a clear internal linking structure across your website. Ensure that every page is reachable from at least one other page. Regularly audit your site to identify and connect orphan pages to the main navigation.
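An orphan-page audit boils down to a set difference: pages you know exist (for example, from the sitemap) minus pages that receive at least one internal link. The sketch below assumes you already have both data sets from a crawl; the function name `find_orphans` and the sample paths are hypothetical.

```python
def find_orphans(known_urls: set[str], internal_links: dict[str, set[str]]) -> set[str]:
    """Return known URLs that no other page links to.

    `internal_links` maps each source page to the set of pages it links to.
    """
    linked = set()
    for source, targets in internal_links.items():
        # A page linking to itself does not make it discoverable.
        linked.update(target for target in targets if target != source)
    return known_urls - linked


# Hypothetical site: /clearance is in the sitemap but nothing links to it.
known = {"/", "/products", "/products/shoes", "/clearance"}
links = {
    "/": {"/products"},
    "/products": {"/", "/products/shoes"},
    "/products/shoes": {"/products"},
}
print(find_orphans(known, links))  # {'/clearance'}
```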

Non-optimized URLs

URLs with random characters, excessive parameters, or unclear structures can confuse search engines. These non-optimized URLs may lead to multiple versions of the same page being crawled unnecessarily. This wastes server resources and reduces the efficiency of indexing important pages.

To optimize URLs, create clean, descriptive, and consistent URL structures for all pages. Avoid duplicate parameters and unnecessary query strings. Implementing proper URL optimization strategies ensures the best use of your crawl budget for large sites.
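A common first step is to normalize URLs so that variants of the same page collapse into one form. The sketch below uses Python's standard `urllib.parse`; the `TRACKING_PARAMS` list and the function name `normalize_url` are assumptions, and which parameters are safe to drop depends entirely on your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change page content on this example site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize_url(url: str) -> str:
    """Lowercase scheme and host, drop tracking parameters, and sort the rest."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    path = parts.path or "/"
    # The fragment is dropped because it is never sent to the server.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


print(normalize_url("HTTPS://Example.com/shoes?utm_source=mail&size=9&color=red"))
# https://example.com/shoes?color=red&size=9
print(normalize_url("https://example.com/shoes?color=red&size=9"))
# https://example.com/shoes?color=red&size=9
```

Both inputs map to the same normalized URL, which makes duplicate variants easy to group in a crawl export.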

Low-value content that hinders crawl efficiency

Large websites often have pages with little or outdated content that consume crawl resources unnecessarily. The crawl budget is wasted when bots spend time on low-value pages instead of important ones. Identifying and managing these pages ensures that search engines focus on what really matters. Extra care is needed when managing crawl budget for multilingual websites, as multiple language versions of each page can quickly multiply the number of URLs to crawl.

To address low-value pages, conduct regular content audits to find underperforming or obsolete pages. Consolidate similar pages, improve thin content, or remove them entirely. This practice improves overall crawl efficiency and helps large sites maintain SEO performance.

Frequent errors that hinder effective search engine crawling

Crawl budget tracking tools for large websites

Tracking crawl activity is essential for large websites to ensure search engines index important pages efficiently. Using the right tools helps identify issues and optimize crawl budget for maximum SEO performance.

Google Search Console

Crawl budget for large sites can be monitored effectively using Google Search Console. The “Crawl Stats” report shows how often your pages are crawled and highlights any errors that may occur. Regularly checking this data helps ensure bots are crawling the right pages.

Submit sitemaps and request indexing for specific pages to improve visibility. Address crawl errors promptly to prevent waste of crawl budget. Google Search Console also allows monitoring of blocked or unreachable pages.

Website Analytics Tools

Analytics platforms like Google Analytics provide insights into which pages are actually visited by users. Comparing this data with crawl activity helps identify valuable pages that may not be crawled effectively. In this way, user engagement indirectly informs crawl priorities.

By analyzing traffic and behavior patterns, you can adjust your site structure or internal linking. This makes sure important pages receive proper attention. Regularly reviewing analytics data helps maintain optimal crawl efficiency.

Crawl monitoring tools

Detailed crawl monitoring tools, such as Screaming Frog or Botify, provide in-depth information on how bots interact with your site. They highlight slow-loading pages, errors, or excessive crawling of low-value content. Using these insights helps improve crawl performance.

Large websites can preserve their crawl budget by fixing the issues these tools identify. Regular use of monitoring tools helps keep bots focused on important pages. This allows large sites to maximize indexing efficiency and overall SEO results.

Useful tools to monitor and analyze site crawling efficiency

How On Digitals can help optimize crawl budget for large sites

When working with On Digitals, improving crawl budget for large sites becomes fast and efficient. Large websites require careful management of crawl activity to ensure important pages are discovered. The agency ensures that bots focus on the pages that matter most for SEO visibility.

Crawl budget refers to how many pages search engine bots crawl on your website within a given time. For large websites, proper management helps high-priority pages get indexed quickly. This supports better visibility in search results.

On Digitals offers tailored SEO services for large sites that continue to grow in size and complexity. As new pages are added, the agency adapts its crawl budget strategy to maintain efficiency. This ensures that search engines always index the most important content, regardless of site scale.

Ways an SEO agency improves crawling for complex websites

FAQs on improving crawl budget for large sites

Optimizing crawl budget is essential for large websites to ensure search engines index the most important pages efficiently. Many site owners are unsure how to prioritize pages, fix errors, or manage site structure for better crawling. These FAQs answer the most common questions and provide practical tips to improve crawl budget for large sites.

How can crawl budgets be optimized for big websites?

To optimize crawl budget on a large website, start by identifying crawl errors and fixing broken links. Improve your site architecture by creating a clear hierarchy and using proper internal linking to guide search engine bots. Remove redundant or low-value pages and ensure the XML sitemap and robots.txt are configured for proper crawling. Regularly monitor your site and adjust the strategy as new content is added or the website evolves to maintain optimal crawling efficiency.

How do broken links influence crawl budgets on large sites?

Crawl errors, such as 404 pages or broken links, can waste crawl budgets for large sites and prevent bots from reaching important pages. These issues slow down indexing and reduce opportunities for higher search rankings. By identifying and fixing crawl errors, you ensure that search engines focus their resources on valuable content that drives traffic and SEO performance.

How does crawl rate differ from crawl demand?

The crawl rate refers to how quickly search engine bots can crawl pages on your website without overloading your server, while crawl demand depends on the popularity, freshness, and relevance of your content. Pages that are important or recently updated generate higher crawl demand, so search engines want to revisit them sooner. Balancing crawl rate and crawl demand helps optimize crawl budget for large sites, ensuring bots focus on high-value pages efficiently.

What methods help prioritize pages for crawl budget efficiency?

Websites often have many pages, but not all are equally important. By prioritizing high-value pages – such as product pages, popular blog posts, or key service pages – you ensure search engines focus their crawl budget on content that drives traffic and conversions. Proper internal linking, updated sitemaps, and frequent content audits help maintain an efficient crawl budget over time.

Answers to common questions about enhancing site crawl efficiency

Conclusion

Crawl budget for large sites is essential for ensuring that search engines efficiently crawl and index your most important pages. Proper management prevents wasted resources and maximizes SEO performance.

With the expertise and SEO services of On Digitals, large websites can optimize their crawl budget effectively and achieve better visibility in search results.
